
What Are Web Crawlers And Why They Matter for SEO (2026 Guide)

Web crawlers matter more than ever in the 21st century, as people and businesses move into the digital world and want to be known, seen, and heard.

Whether you are already online or planning to be, you need to understand what all these people and their websites are fighting for: visibility.

But before your pages or content can rank, attract clicks, or bring in revenue, something crucial must happen behind the scenes: web crawlers must discover and understand your website.

These silent internet bots used by Google, Bing, and other search engines are responsible for scanning your pages, interpreting your content, and deciding where you belong in search results.

In other words, web crawlers are the gatekeepers of SEO (search engine optimization): if they can’t find your site, read your pages, or crawl your content efficiently, your website simply disappears from the search landscape.

But here’s the part most people overlook:

👉 Your hosting provider directly affects how well these crawlers can access and index your website.


A slow, unstable server can make crawlers back off or give up on your pages. A fast, optimized server can boost your crawl rate, and with it your rankings.

That’s why modern, high-performance hosting platforms like HarmonWeb.com are becoming essential for SEO-focused businesses.

With ultra-fast NVMe storage, high uptime, free SSL, and optimized server architecture, HarmonWeb ensures that search bots experience your site the way they should: fast, secure, and fully accessible.

This article breaks down exactly what web crawlers do, why they matter for SEO, and how HarmonWeb gives you a technical advantage in getting crawled, indexed, and ranked faster.

What Is a Web Crawler?

A web crawler (also called a “spider,” “bot,” or “search crawler”) is a software program used by search engines to systematically browse the web, discover content, and build an index of pages.

Here’s how web crawlers work:

- They start with a list of known URLs (called “seed URLs”).

- For each page they visit, they retrieve the HTML content and scan for links. Every link becomes another URL to visit — this expands the “crawl frontier.”

- Well-behaved crawlers respect instructions from the site owner (via robots.txt, noindex tags, etc.), so you can control what gets crawled and indexed.

- The crawled content (text, metadata, images, structured data) is stored in a search engine’s index. Later, when users search, the search engine retrieves relevant pages from this index.
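The discovery loop in this list (seed URLs, link extraction, a growing crawl frontier, robots.txt checks) can be sketched in Python using only the standard library. This is a simplified illustration, not how Google or Bing actually implement their crawlers. The `fetch` function is passed in so the example runs without network access, and the bot name `ExampleBot`, the page limit, and the example.com pages are all hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, robots_txt="", user_agent="ExampleBot", max_pages=50):
    """Breadth-first crawl from seed_urls ("ExampleBot" is a hypothetical bot name).

    fetch(url) must return the page's HTML as a string, or None on failure.
    Returns a dict mapping each crawled URL to its HTML (a toy "index").
    """
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())

    frontier = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)         # never queue the same URL twice
    index = {}

    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue              # the site owner asked bots not to crawl this
        html = fetch(url)
        if html is None:
            continue              # e.g. a timeout or server error
        index[url] = html

        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).scheme in ("http", "https") and absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)   # grow the crawl frontier

    return index


# Hypothetical in-memory "website" so the example runs offline
pages = {
    "https://example.com/": '<a href="/about">About</a> <a href="/private/admin">Admin</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": "<p>No links here</p>",
    "https://example.com/private/admin": "<p>Admin panel</p>",
}
index = crawl(["https://example.com/"], pages.get, robots_txt="User-agent: *\nDisallow: /private/")
print(list(index))
# ['https://example.com/', 'https://example.com/about', 'https://example.com/blog']
```

The admin page exists in the sample data but is never fetched, because the robots.txt rule disallows `/private/`; the `#top` fragment is stripped so `/blog` is only crawled once.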

Without web crawlers, search engines wouldn’t know your content exists and users wouldn’t find your pages via Google, Bing, or other search engines.

Why Web Crawlers Are Critical for SEO & Website Visibility

1. First Step for Discoverability & Indexing

No crawler → no indexing → no search visibility.

If search crawlers don’t visit your site (or are blocked), your site won’t appear in search results — no matter how good your content is.

2. Content & Metadata Reading

Crawlers pick up your page’s text content, meta titles, descriptions, headings, schema markup, alt text, and internal links, all of which help search engines understand what your page is about and rank it appropriately.

3. Freshness & Updates

When you add or update content, crawlers pick up the changes and re-index those pages on their next visit, which is why crawl frequency matters.

Regular crawling ensures search engines show your newest content to users, which is especially important for blogs, news sites, e-commerce stores, and any site that updates frequently.

4. Crawl Budget & Site Structure Efficiency

Search engines allocate a “crawl budget”: how many pages they crawl on your site in a given period. A fast, well-structured website helps crawlers work efficiently, so more of your pages get crawled and indexed. (HarmonWeb)

If your server responds slowly or returns errors, crawlers may skip pages or crawl fewer of them, hurting your visibility.
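To see why response time matters, here is a deliberately simplified back-of-the-envelope model. It assumes a crawler that spends a fixed amount of time on your site and keeps a fixed number of requests in flight; real search engines adjust their crawl rate dynamically based on server health, so treat the numbers as illustrative only. The function name and parameters are hypothetical.

```python
# Hypothetical helper: a toy model, not how any real search engine budgets crawling.
def pages_per_window(window_seconds: int, avg_response_ms: int, connections: int = 1) -> int:
    """Estimate how many pages fit into a fixed crawl window.

    Assumes every request takes avg_response_ms and the crawler keeps
    `connections` requests in flight the whole time.
    """
    if avg_response_ms <= 0 or connections <= 0:
        raise ValueError("avg_response_ms and connections must be positive")
    total_ms = window_seconds * 1000 * connections
    return total_ms // avg_response_ms


# Same 60-second window, fast server vs. slow server
print(pages_per_window(60, 200))   # 300
print(pages_per_window(60, 1500))  # 40
```

Under these assumptions, cutting average response time from 1.5 seconds to 200 milliseconds lets the same crawl window cover about seven times as many pages.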

5. User Experience & Technical SEO Signals

Search engines don’t just read content; they also take technical factors into account, such as server response time, page load speed, stability, and security (HTTPS).

These technical signals can influence your SEO rankings.

Poor hosting, slow response times, or downtime → worse crawler experience → worse SEO outcomes.


How Hosting Affects Crawling, Indexing & SEO — Why Hosting Matters

Your hosting environment isn’t a “nice to have” — it’s a core SEO factor. Here’s why:

| Hosting Factor | Effect on Crawlers / SEO |
|---|---|
| Server Response Time / Speed | Faster response → crawlers can crawl more pages per visit; improved Core Web Vitals → better rankings. |
| Uptime & Reliability | Constant uptime ensures crawlers can access the site at any time; downtime can lead to missed crawls or indexing issues. |
| Resource Allocation & Scalability | With proper resources (SSD/NVMe, CPU, RAM), the site handles traffic spikes smoothly, which keeps pages crawlable during traffic surges or large sitemap crawls. |
| Security (HTTPS, SSL, Firewall) | Secure sites signal trust to search engines, and Google uses HTTPS as a ranking signal; essential for ranking and user trust. |
| Geo-Location & Latency | A server close to your target audience reduces latency, which is better for local SEO and a faster user experience. |

Why HarmonWeb Is a Smart Hosting Choice to Maximize Web Crawl & SEO Success

If you want web crawlers to index and rank your site effectively, you need hosting that doesn’t hold you back.

HarmonWeb offers several advantages that directly support crawlability, indexing, and SEO performance:

- NVMe SSD / High-Performance Hosting Infrastructure — ensures fast server response times, optimized load speed, and better handling of crawl requests. (HarmonWeb)

- Reliable Uptime & Stability — provides high availability, reducing risk of downtime during crawler visits.

- Support for Modern SEO Best Practices — SSL inclusion, optimized server stack, consistent performance — all help meet search engine expectations and avoid technical SEO penalties.

- Scalability for Growth — from small blogs to high-traffic sites or e-commerce, HarmonWeb hosting scales so you won’t outgrow server resources when crawlers demand it.

- Better Crawl Budget Utilization — efficient, fast servers allow crawlers to do more per visit — ensuring deeper indexing for large sites. (HarmonWeb)

In short: using HarmonWeb doesn’t just make your website faster and more stable; it makes your content more visible and index-friendly, giving you an SEO edge from the ground up.

Best Practices to Make Your Website Crawler-Friendly

To get the most from crawlers (and HarmonWeb), consider the practices in this section.

Search engine crawlers are the gateway between your website and the visibility you earn online.

If you want more traffic, better rankings, and faster indexing, you need to make your site easy for these bots to explore and interpret.

Although it may seem unusual to optimize your site for automated programs rather than humans, it’s a critical part of modern SEO. And it all hinges on two core elements:

1. Crawl Budget Optimization

Every website receives a limited number of crawl attempts. Bots cannot scan your website endlessly—they use server resources, and search engines allocate only a controlled amount of crawling per domain.

If your site loads slowly, contains duplicate pages, or has broken links, you waste your crawl budget. The result?

❌ Important pages might not get crawled

❌ New content may take longer to appear in search results

❌ Rankings suffer over time

Your goal is to help crawlers complete their job with minimal friction.
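One common way crawl budget gets wasted is the same page being reachable under several URLs (tracking parameters, trailing slashes, #fragments). The Python sketch below shows the idea of URL normalization for spotting such duplicates. The list of ignored parameters is a hypothetical example, and it assumes your site serves identical content with and without a trailing slash. On a live site you would also point crawlers at the preferred version with a `rel="canonical"` link rather than rely on them to guess.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of query parameters that do not change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref", "sessionid"}


def normalize_url(url: str) -> str:
    """Map duplicate-looking URLs onto one normalized form."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.netloc.lower()
    path = parts.path or "/"
    # Assumption: /shoes and /shoes/ serve the same page on this site.
    if path != "/" and path.endswith("/"):
        path = path.rstrip("/")
    # Drop tracking parameters and sort the rest so order doesn't matter.
    query = urlencode(sorted(
        (k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in IGNORED_PARAMS
    ))
    return urlunsplit((scheme, host, path, query, ""))  # fragment removed


def dedupe(urls):
    """Return the distinct normalized URLs, in first-seen order."""
    seen = {}
    for url in urls:
        seen.setdefault(normalize_url(url), url)
    return list(seen)


urls = [
    "https://Example.com/shoes/",
    "https://example.com/shoes?utm_source=newsletter",
    "https://example.com/shoes#reviews",
    "https://example.com/boots",
]
print(dedupe(urls))  # ['https://example.com/shoes', 'https://example.com/boots']
```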

2. Improve Crawler Understanding

Crawlers don’t “read” a page the way humans do. They look for patterns, structural cues, and standardized elements.

Some of what they analyze includes:

- Title tags

- Meta descriptions

- Headings

- Body text

- Image attributes

- Internal/external links

- Structured data

- JavaScript (when possible)

When your content is clearly organized, crawlers decode your page’s purpose quickly—leading to more accurate indexing and better ranking potential.
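As a rough illustration of what "reading" these elements means, the Python sketch below pulls the title tag, meta description, headings, and image alt text out of an HTML page using the standard library's `html.parser`. It is a simplified stand-in for what a search engine does, not its actual parser, and the class name `PageSignals` is made up for this example.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class PageSignals(HTMLParser):
    """Hypothetical helper: collect a few on-page elements crawlers look at."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.headings = []      # (tag, text) pairs, e.g. ("h1", "Title")
        self.image_alts = []
        self._current = None    # tag whose text we are currently capturing

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title" or tag in HEADINGS:
            self._current = tag
            if tag in HEADINGS:
                self.headings.append((tag, ""))
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img":
            self.image_alts.append(attrs.get("alt") or "")  # empty string = missing alt

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current:
            tag, text = self.headings[-1]
            self.headings[-1] = (tag, text + data)


html = (
    '<html><head><title>Web Crawlers Guide</title>'
    '<meta name="description" content="How crawlers work."></head>'
    '<body><h1>What Is a Web Crawler?</h1><img src="bot.png" alt="Search bot">'
    '<h2>Why It Matters</h2></body></html>'
)
signals = PageSignals()
signals.feed(html)
print(signals.title)             # Web Crawlers Guide
print(signals.meta_description)  # How crawlers work.
print(signals.headings)          # [('h1', 'What Is a Web Crawler?'), ('h2', 'Why It Matters')]
```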

How to Make Your Website More Crawler-Friendly

Below are the most effective techniques to help search engines understand your content clearly and efficiently:

Use Clean, Well-Structured HTML

HTML is the backbone of the web, and crawlers rely heavily on it to interpret your pages.

Semantic HTML elements give crawlers valuable context. Important tags include:

- `<header>`: signals the beginning of the page

- `<main>`: identifies the core content

- `<article>`: defines self-contained information

- `<section>`: organizes related content

- `<h1>` to `<h6>`: establishes content hierarchy

These tags act like a map, showing search engines how your content is organized and what matters most.

Avoid HTML mistakes such as:

- Skipping heading levels (e.g., jumping from `<h1>` to `<h4>`): this disrupts the content hierarchy.

- Overusing `<div>` elements: a `<div>` offers no meaning on its own, making your code harder for bots to understand.
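If you want to catch skipped heading levels before a crawler does, a small script can walk the headings in document order and flag any jump of more than one level. This is a hypothetical helper written for illustration using Python's built-in `html.parser`, not a feature of any SEO tool; it only checks heading order, not whether the headings themselves are meaningful.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Hypothetical helper: flag headings that skip levels, e.g. <h1> then <h4>."""

    LEVELS = {f"h{n}": n for n in range(1, 7)}

    def __init__(self):
        super().__init__()
        self.previous = 0      # level of the last heading seen (0 = none yet)
        self.problems = []

    def handle_starttag(self, tag, attrs):
        level = self.LEVELS.get(tag)
        if level is None:
            return
        # Going deeper by more than one level breaks the hierarchy;
        # going back up (e.g. h4 -> h2) is fine.
        if self.previous and level > self.previous + 1:
            self.problems.append(f"h{self.previous} -> {tag}")
        self.previous = level


def audit_headings(html: str) -> list[str]:
    parser = HeadingAudit()
    parser.feed(html)
    parser.close()
    return parser.problems


print(audit_headings("<h1>Guide</h1><h4>Detail</h4>"))                     # ['h1 -> h4']
print(audit_headings("<h1>A</h1><h2>B</h2><h3>C</h3><h2>D</h2>"))          # []
```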

Keep Your URL Structure Clean and Meaningful

Your URL is part of your content. It gives both users and crawlers instant context about what a page is about.

Effective URL example:

https://harmonweb.com/hosting/wordpress-hosting

Clear. Organized. Keyword-friendly.

Ineffective URL example:

https://example.com/product.php?id=4532&ref=Zx91q

This tells neither users nor crawlers what the page is about, and tracking parameters like `ref` can create several different URLs for the same content.

Always choose URLs that:

- Are short

- Are descriptive

- Use meaningful words

- Avoid random characters
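If your CMS does not generate clean URLs for you, these rules can be applied automatically. Here is a minimal Python sketch that turns a page title into a short, readable slug; the stop-word list and the six-word limit are assumptions chosen for illustration, not an SEO standard.

```python
import re
import unicodedata

# Hypothetical list of filler words to drop from slugs; adjust to taste.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "for", "in", "on"}


def make_slug(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, descriptive URL slug."""
    # Strip accents so "Café" becomes "Cafe", then drop any remaining non-ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep only runs of letters and digits; everything else separates words.
    words = re.findall(r"[a-z0-9]+", text.lower())
    meaningful = [w for w in words if w not in STOP_WORDS]
    return "-".join(meaningful[:max_words])


print(make_slug("The Best WordPress Hosting for Small Businesses!"))
# best-wordpress-hosting-small-businesses
```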

Use Schema Markup & Limit JavaScript Reliance

Schema Markup

Structured data (like JSON-LD) gives search engines explicit information about the type of content they’re looking at.

Examples include:

- Articles

- Products

- FAQs

- Recipes

- Services

- Events

Adding schema can make your pages eligible for rich results, which can improve their visibility in search.
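For example, a blog post like this one could describe itself as a schema.org `Article` using JSON-LD. The sketch below builds that block in Python. The author name, date, and URL are placeholder values, and you should check the search engine's structured data documentation for which properties are required for the rich result you are targeting.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    """Build a schema.org Article description wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,   # ISO 8601 date, e.g. "2026-01-15"
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only
snippet = article_jsonld(
    headline="What Are Web Crawlers And Why They Matter for SEO",
    author="Jane Doe",
    date_published="2026-01-15",
    url="https://example.com/blog/web-crawlers",
)
print(snippet)
```

The resulting `<script>` tag goes in the page's HTML (usually the `<head>`), where crawlers can read it without running any JavaScript.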

JavaScript Considerations

While search engines can process JavaScript, they don’t always do so reliably.

Some scripts:

- Render too slowly

- Fail to load

- Are skipped entirely

If important content relies on JavaScript, crawlers may miss it. Always ensure essential information is available in HTML.
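A quick way to spot this problem is to look at the raw HTML your server sends, before any JavaScript runs, and check whether your key content is there. The Python sketch below does that for a single phrase, ignoring text inside `<script>` and `<style>` tags. It is only a rough check: it does not render the page the way a search engine's renderer does, and the helper names and sample pages are hypothetical.

```python
from html.parser import HTMLParser


class StaticText(HTMLParser):
    """Collect the text present in raw HTML, ignoring <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0   # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


# Hypothetical helper: True if `phrase` appears in the HTML without running any JavaScript.
def visible_without_js(html: str, phrase: str) -> bool:
    parser = StaticText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # collapse whitespace
    return phrase.lower() in text.lower()


server_rendered = "<main><h1>Pricing</h1><p>Plans start at $2.99/month.</p></main>"
client_rendered = (
    '<main id="app"></main>'
    '<script>document.getElementById("app").innerHTML = "<p>Plans start at $2.99/month.</p>";</script>'
)
print(visible_without_js(server_rendered, "Plans start at"))  # True
print(visible_without_js(client_rendered, "Plans start at"))  # False
```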

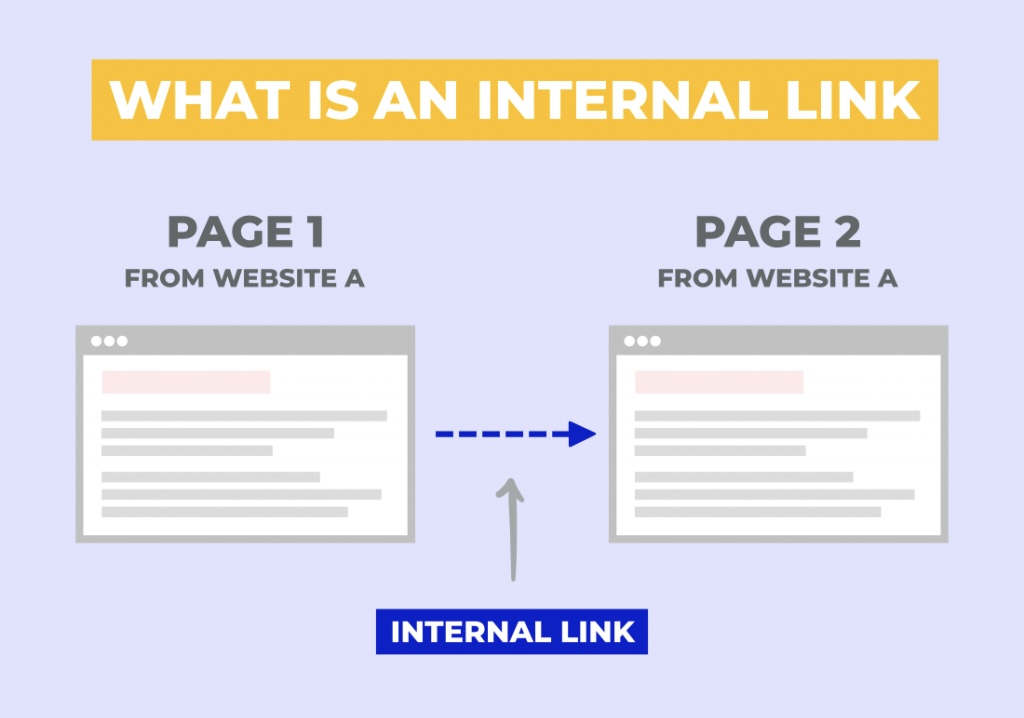

Strengthen Your Internal Linking Strategy

Internal links are one of the strongest signals you can provide to crawlers.

They help bots:

- Discover new pages

- Understand content relationships

- Prioritize your most important pages

Guidelines:

✔ Use descriptive anchor text

✔ Link to key pages regularly

✔ Keep link placement natural

✔ Avoid excessive linking (too many links dilute value)

A well-connected site is easier for crawlers to navigate and index.
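To put these guidelines into practice, it helps to know which pages receive the fewest internal links. Given a map of each page's outgoing internal links (which you would collect with a site crawler or export from an SEO tool; the dictionary below is made-up sample data), this Python sketch counts inbound links and lists orphan pages that no other page links to. Orphan pages are hard for crawlers to discover by following links.

```python
from collections import Counter


def inbound_link_counts(links: dict[str, list[str]]) -> Counter:
    """Count how many other pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in links})   # start every known page at 0
    for source, targets in links.items():
        for target in set(targets):                   # count each source page once
            if target != source:
                counts[target] += 1
    return counts


def orphan_pages(links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Pages (other than the homepage) that no other page links to."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n == 0 and page != home)


# Made-up sample data: each page mapped to the internal links it contains.
site = {
    "/": ["/hosting", "/blog"],
    "/hosting": ["/", "/hosting/wordpress-hosting"],
    "/hosting/wordpress-hosting": ["/hosting"],
    "/blog": ["/", "/blog/web-crawlers"],
    "/blog/web-crawlers": ["/hosting/wordpress-hosting"],
    "/blog/old-post": ["/"],
}
print(orphan_pages(site))  # ['/blog/old-post']
```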

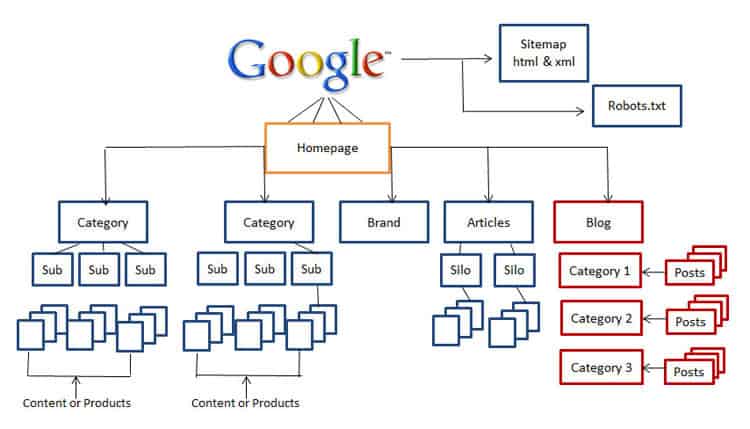

Submit an XML Sitemap

An XML sitemap is essentially a directory of your website specifically designed for search engines.

It helps crawlers:

- Understand your site’s structure

- Discover new pages faster

- Prioritize key areas of your website

You simply create it using a sitemap generator or CMS plugin, then submit it to Google Search Console or Bing Webmaster Tools.
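Most CMS plugins generate the sitemap for you, but the format itself is simple: a `<urlset>` of `<url>` entries in the sitemaps.org namespace, each with a `<loc>` and optionally a `<lastmod>`. Here is a minimal Python sketch that builds one; the page list is placeholder data, and large sites would need to split the output because the sitemap protocol caps each file at 50,000 URLs.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # characters like & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2026-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages; in practice these would come from your CMS or database.
sitemap = build_sitemap([
    ("https://example.com/", "2026-01-15"),
    ("https://example.com/blog/web-crawlers", "2026-01-20"),
])
print(sitemap)
```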

Configure Your robots.txt File Correctly

Your robots.txt file works like a guidebook for crawlers. It tells them:

- Which folders to crawl

- Which areas to avoid

- Which bots are allowed or blocked

- Where the XML sitemap is located

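Used wisely, this file improves crawl efficiency by keeping bots away from areas that don’t need crawling. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so use a noindex tag (on a page crawlers can reach) or password protection for pages that must stay out of search results.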
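Here is what a simple robots.txt might look like, together with a quick way to test it using Python's built-in `urllib.robotparser`. The paths, the `BadBot` user agent, and the sitemap URL are placeholders. Python's parser is a reasonable sanity check, but it does not implement every detail of how Google interprets robots.txt (for example, wildcard patterns in paths), so confirm important rules with the search engine's own testing tools.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt; the paths, "BadBot", and the sitemap URL are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/

User-agent: BadBot
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, path in [
    ("Googlebot", "/blog/web-crawlers"),   # allowed by the "*" group
    ("Googlebot", "/wp-admin/settings"),   # blocked by Disallow: /wp-admin/
    ("BadBot", "/blog/web-crawlers"),      # BadBot is blocked everywhere
]:
    print(agent, path, parser.can_fetch(agent, f"https://example.com{path}"))

print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```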


In Conclusion: Web Crawlers + Good Hosting = SEO Success

Web crawlers are the gatekeepers of visibility on the internet. They discover your content and feed it into search engines’ indexes, giving users a way to find you.

But even the best content can remain invisible if your host slows down the process or limits access.

That’s why web hosting matters just as much as content.

- A good hosting provider ensures your site loads fast, responds reliably, and stays secure.

- A bad host can cripple your SEO regardless of how good your content is.

With a strong, optimized hosting provider like HarmonWeb, you can make sure web crawlers can access and index your site, giving your content the visibility and performance it deserves.